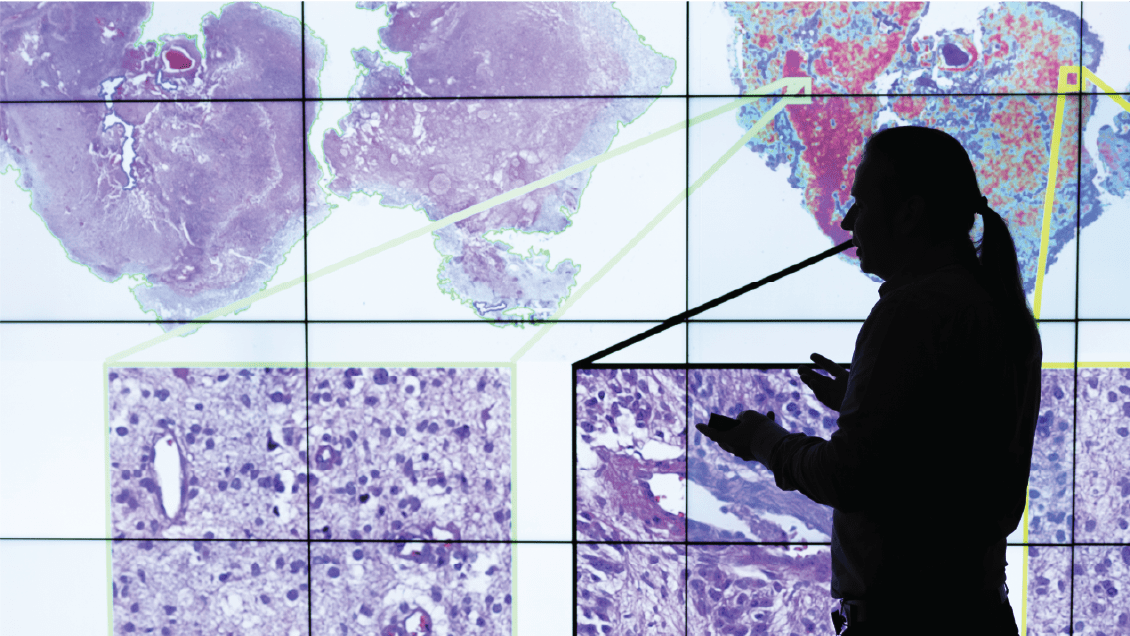

"IU offers unfettered access to the data of a third of all patients in the state, and we have a strong presence in data science and data analytics,” said Michael Feldman, MD, PhD, chair of the Department of Pathology and Laboratory Medicine and the Manwaring Professor of Pathology and Laboratory Medicine at the IU School of Medicine. "There's a huge opportunity to move this science forward."

The promise of the technology, already being realized, is to help researchers work faster and expand the scope of their gaze to thousands of patients across the country or around the world. It offers to do tedious jobs much quicker, sometimes converting weeks of human work into days, and days into hours or minutes. For clinicians, it holds the promise of less documentation and better follow-up care. For radiologists, it offers a second read on images, reducing the likelihood that something vital is overlooked.

This raises the question: Will artificial intelligence make humans in medicine obsolete? The consensus at IU School of Medicine is a resounding no.

Huang, the chair in bioinformatics, said it's not a question of whether AI is better than humans. "If you ask AI to read a thousand papers, of course, it's going to be better — it's a computer," he said. "But on the other hand, if you really want AI to help you understand that and provide you something really explainable and accurate and understandable, then no."

Dana Mitchell, MD, MS, an assistant research professor of pediatrics, is actively training cutting-edge AI software to identify tumor tissue within the peripheral nervous system. When she's done, the program will allow her to analyze a much larger number of samples more quickly than would be possible if she or one of her technicians had to review that many slides themselves.

Another way to look at it is like a plane on autopilot, said Kevin L. Smith, MD, an assistant professor of clinical radiology and imaging sciences who leads the clinical AI program in radiology at IU Health. “Even as the autopilot is engaged, the human pilot validates that the system works as it should.”

And there is still plenty of work to do.

Algorithms are not built in isolation. They are trained on, and analyze, data extracted from a real and tactile world. That means tools intended to be objective are not entirely free of bias. As Grannis notes, AI models should behave in ways we expect and produce reliable results that can be replicated. Since much of the work is built on patient records, close attention must also be paid to confidentiality and privacy.

There are even issues with health disparities, Huang said. As AI is deployed in clinical settings, how widely will it be used, and which patients will have access to its benefits? Academic health centers and hospitals in wealthy communities are already employing it. But what about health care settings in lower-resource rural areas or low-income countries, such as Kenya? AI has the potential to bridge these gaps — giving radiologists in remote areas an AI backup read — or, in AI’s absence, to make disparities worse.

Pollok, who met Tyler Trent before his death and is now one of the caretakers of his tumor data, agrees. A biologist by training and experience, she claims to be learning bioinformatics — and AI — as she goes, despite evidence she's well-skilled. But Pollok is hopeful that, before she retires, her lab can push two potential therapies to clinical trials, including one for Tyler's disease, osteosarcoma.

If it happens, it will likely have been made possible with the help of artificial intelligence.

Laura Gates and Matthew Harris contributed to this story.