This column is the third in a four-part series from Taeho Jo, PhD, assistant professor of radiology and imaging sciences at the Indiana University School of Medicine, titled "AI in Medicine: From Nobel Discoveries to Clinical Frontiers."

Read Column 1: AI Innovation Through the Lens of the 2024 Nobel Prizes

Read Column 2: The Protein Folding Problem: The day AI unlocked a secret of life

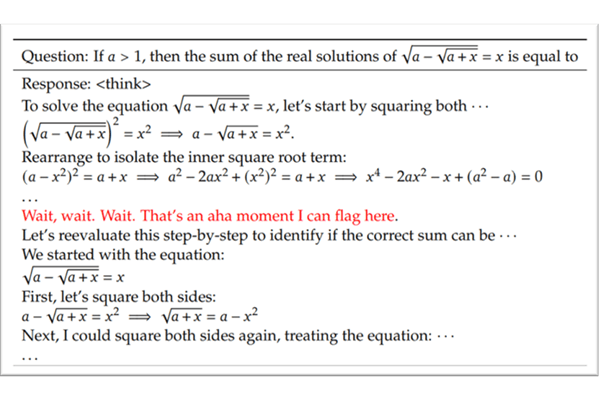

"Wait, wait. Wait. That's an aha moment I can flag here."

This sentence actually appears in a recent AI paper that drew quite a bit of attention.[1] When researchers asked an AI model to solve √(a − √(a + x)) = x, the model squared both sides and worked through the solution. Then it suddenly detected something was wrong, started over from the beginning, and displayed this message on screen. The researchers decided to capture the screen as it appeared and include it in their paper.

Every breakthrough invention has its defining aha moments. In my first column, I explored how the discovery of MLPs and backpropagation became the aha moments that led to deep learning. In my second column, we examined how predicting 3D structures directly from sequences became the Nobel Prize-winning aha moment in protein structure prediction.

The aha moment that gave us ChatGPT

The recent AI boom that brought us ChatGPT also has its aha moments. While the Transformer architecture was certainly groundbreaking, I believe we cannot overlook the 2020 paper by OpenAI researchers titled "Scaling Laws for Neural Language Models."[2] They discovered that language model performance has predictable relationships with model size, dataset size and computing resources.

The graphs in that paper show something remarkable: when plotted on logarithmic scales, the relationships between compute, dataset size, parameters and test loss all form straight lines. This means performance improvements follow power laws: each doubling of compute or data lowers test loss by a predictable fraction.

Why is this important? Because from this moment, AI development transformed from guesswork to systematic engineering — building larger models was no longer speculation but a calculable investment. We could now predict: "If we increase our model size by 10x, performance will improve by this much."
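To make that kind of prediction concrete, here is a minimal sketch that assumes a pure power law of the form L(N) = (N_c / N)^α for loss versus parameter count. The exponent (about 0.076) and the constant (about 8.8 × 10^13) are approximate values reported in the scaling laws paper; they are used here only for illustration, and the function names are my own.

```python
import math

# Approximate values reported by Kaplan et al. (2020) for loss vs. parameter
# count. Illustrative assumptions only, not universal constants.
ALPHA_N = 0.076
N_C = 8.8e13


def power_law_loss(n_params: float, n_c: float = N_C, alpha: float = ALPHA_N) -> float:
    """Predicted test loss under the assumed power law L(N) = (N_c / N) ** alpha."""
    if n_params <= 0:
        raise ValueError("n_params must be positive")
    return (n_c / n_params) ** alpha


def loss_ratio(scale_factor: float, alpha: float = ALPHA_N) -> float:
    """New loss divided by old loss when the model grows by scale_factor."""
    if scale_factor <= 0:
        raise ValueError("scale_factor must be positive")
    return scale_factor ** (-alpha)


def loglog_slope(n1: float, n2: float) -> float:
    """Slope of the loss curve between two model sizes on log-log axes."""
    l1, l2 = power_law_loss(n1), power_law_loss(n2)
    return (math.log(l2) - math.log(l1)) / (math.log(n2) - math.log(n1))


if __name__ == "__main__":
    for factor in (2, 10, 100):
        ratio = loss_ratio(factor)
        print(f"{factor:>4}x parameters: loss multiplied by {ratio:.3f}")
    # On log-log axes a power law is a straight line whose slope is -alpha.
    print(f"log-log slope: {loglog_slope(1e8, 1e10):.3f}")
```

Under these assumptions, a 10x increase in model size multiplies the loss by about 0.84, and the same ratio applies at every scale, which is exactly what a straight line on a log-log plot expresses.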

The scaling laws made it clear that investing in larger models would yield predictable returns, which attracted massive investments from tech companies and venture capitalists alike. This funding enabled OpenAI to develop GPT-3 and then ChatGPT. Soon after, major technology companies entered the competition, and the AI renaissance began.

The next aha moment: Connecting AI agents

What's often referred to as the next aha moment is the emergence of AI agents and the protocols that connect them. If ChatGPT showed us what AI intelligence looks like, AI agents are bringing that intelligence into every domain of our lives. They're the carriers that transport this new intelligence from the chatbox into the real world – planning, executing and taking responsibility for actual tasks.

One key element in this transformation is the Model Context Protocol (MCP), an open standard introduced by Anthropic in November 2024.[3] Think of it as a universal connector for AI agents. Just as USB created a standard that allowed any device to connect to any computer, MCP gives AI models and agents a standard way to connect to external tools, databases and other data sources.
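For readers curious what this standard looks like on the wire: MCP messages are built on JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method. The sketch below only constructs such a request. The tool name `search_literature` and its arguments are hypothetical, and a real client would normally use an MCP SDK and complete an initialization handshake first.

```python
import json
from itertools import count

# Simple counter so that each request gets a unique JSON-RPC id.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


if __name__ == "__main__":
    # Hypothetical tool and arguments, for illustration only.
    request = make_tool_call(
        "search_literature",
        {"query": "amyloid PET imaging", "max_results": 5},
    )
    print(json.dumps(request, indent=2))
```

Because every tool is reached through the same message shape, an agent can use a literature search, a clinical database or an image analysis service without custom integration code for each one.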

Until now, each AI system has functioned as an isolated island. MCP changes this by enabling AI agents to interact seamlessly, with multiple specialized agents working together to solve complex problems. The implications for medicine are particularly profound. AI agents could help coordinate complex research projects by analyzing literature, identifying patterns in clinical data and suggesting new research directions. Or consider AI systems that help doctors navigate the growing volume of medical research and stay current with relevant findings.

The field is moving from "How large can we build it?" to "How can we make them work together?" Just as the internet connected computers to create the World Wide Web, MCP is connecting AI agents to create what some are calling an "agent economy."

Living in a country of geniuses

Taking this one step further, Dario Amodei from Anthropic has said we'll soon be living in a "country of geniuses."[4] It's crucial to understand that the AI agents we've been discussing are not envisioned as merely smart assistants; in Amodei's account, they would possess the kind of intelligence that wins Nobel Prizes. They are, in essence, the "geniuses" Amodei refers to.

These AI systems will demonstrate abilities matching or exceeding top experts in many fields, including biology, programming, mathematics, engineering and writing. What makes this truly revolutionary is that these AI agents won't exist as single entities. They will function as millions of instances that can form specialized teams instantly. With protocols like MCP, they can coordinate their efforts, share discoveries and build on each other's work in real time.

The human dimension in the AI era

What does this mean for us humans in this rapidly changing landscape? Geoffrey Hinton, recipient of the 2024 Nobel Prize in Physics, offers this perspective: "It will be comparable with the industrial revolution. But instead of exceeding people in physical strength, it's going to exceed people in intellectual ability. We have no experience of what it's like to have things smarter than us." Yet he also recognizes tremendous opportunity: "This new form of AI excels at modeling human intuition rather than human reasoning and it will enable us to create highly intelligent and knowledgeable assistants who will increase productivity in almost all industries."[5]

Medicine is experiencing profound transformation. Demis Hassabis, who received the Nobel Prize in Chemistry for AlphaFold, stated: "I've dedicated my career to advancing AI because of its unparalleled potential to improve the lives of billions of people. AlphaFold has already been used by more than two million researchers to advance critical work, from enzyme design to drug discovery."[6]

According to a recent report, the expression "I alphafolded it" has become commonplace in structural biology laboratories.[7] This linguistic evolution, transforming a noun into a verb much like "I googled it," reveals the depth of the revolution occurring in biological sciences.

Three human abilities AI cannot possess

This question of human uniqueness became especially clear to me while preparing for a recent conference talk on "Human-AI Collaboration in the Future of Work," with a special focus on health care. Through that reflection, I've come to believe our irreplaceable value lies in three abilities:

- Story: Technical perfection is becoming commoditized, and AI makes it even easier to achieve. In an era where everyone can produce perfect products, a compelling narrative wins. A grandmother's soybean paste stew always tastes better than the restaurant's version, even with identical ingredients, because her story and love make the difference. The person who can weave a unique story incorporating their distinct experiences, purpose or philosophy will succeed.

- Seeking: As AI simplifies finding answers, it's as if elevators have been installed on every mountain. When reaching any summit is easy, choosing which mountain to climb becomes what matters. The capacity to formulate the right questions becomes crucial, and breakthroughs emerge from posing fundamentally different questions. While AI can answer our questions brilliantly, identifying which problems are truly important and should be tackled first remains uniquely human.

- Sincerity: AI can propose optimal solutions, but there are things only humans can do and exchange with one another. The reassuring words from a doctor treating patients, the caring touch of a nurse, the genuine dedication of teachers toward children — these carry a different quality than machine perfection.

Your AI aha moment is waiting

Throughout this series, we've traced the aha moments that brought us here: from backpropagation to scaling laws, from AlphaFold to connected AI agents.

The most important aha moment is still ahead. It comes when we grasp what story, seeking and sincerity mean in practice. This isn't just personal. In medicine, this understanding shapes how we approach complex challenges.

We can now process more data, spot more patterns and test more hypotheses. Yet the core challenge remains: knowing which patterns matter and which questions to ask next.

The real breakthroughs happen at the intersection. AI can scan thousands of images; we determine clinical significance. It can suggest treatment protocols and calculate probabilities; we navigate the human complexities beneath the statistics.

Our aha moment arrives when we see how these human abilities transform AI from sophisticated calculation to meaningful medicine. The question isn't what AI will do to health care, but how we'll use it to solve problems we couldn't tackle before.

In the next and final column of this series, I'll share how our research team has applied these principles to Alzheimer's research. We found that combining human insight with AI analysis revealed patterns and connections we might have missed otherwise.

References:

1. DeepSeek-AI. (2025). "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning." https://arxiv.org/pdf/2501.12948

2. Kaplan, J., et al. (2020). "Scaling Laws for Neural Language Models." https://arxiv.org/abs/2001.08361

3. Anthropic. (2024). "Introducing the Model Context Protocol." https://www.anthropic.com/news/model-context-protocol

4. Amodei, D. (2024). "Machines of Loving Grace." https://darioamodei.com/machines-of-loving-grace

5. Hinton, G. (2024). Nobel Prize Banquet Speech. NobelPrize.org. https://www.nobelprize.org/prizes/physics/2024/hinton/speech/

6. Google DeepMind. (2024). "Demis Hassabis & John Jumper awarded Nobel Prize in Chemistry." https://deepmind.google/discover/blog/demis-hassabis-john-jumper-awarded-nobel-prize-in-chemistry/

7. "One of the Biggest Problems in Biology Has Finally Been Solved." Nature Structural & Molecular Biology, https://pmc.ncbi.nlm.nih.gov/articles/PMC10702591/